Generative artificial intelligence (GAI) is transforming the way organisations operate, driving efficiencies and improving productivity. However, the adoption and use of this technology is associated with complex legal and ethical challenges – particularly around the use of copyright-protected content, including published content, as prompts in GAI tools.

Research carried out this year by the Copyright Licensing Agency (CLA), involving nearly 4,000 UK organisations, found that 61 per cent of professionals already use generative AI at work, with four in five doing so on a weekly basis. More than three-quarters of these users said they upload or copy third-party materials into prompts.

As usage grows, so too does risk. Without appropriate permissions or licences in place, organisations will be exposed to legal and reputational risks arising from potential copyright infringement.

For responsible organisations seeking to navigate these challenges, good governance is a key consideration. Establishing a governance framework early on is therefore essential to ensure appropriate guardrails are in place to support employees, protect organisational information and ensure compliance with the law.

The value of published content in prompting generative AI tools

High-quality published content provides immense value when used to prompt generative AI tools. Professionals use GAI to save time by summarising reports, condensing lengthy documents and extracting key insights, allowing them to focus on higher-value tasks and make faster, better-informed decisions.

According to CLA’s research, 83 per cent of GAI users believe the technology improves their efficiency, creativity and innovation. However, just as organisations routinely pay for access to and use of premium data, research and software to enhance their work, the use of published content to prompt GAI tools must equally be appropriately licensed and paid for.

As generative AI tools become more integrated into professional workflows, it is essential that organisations establish clear guidance on the use of published content for their staff. Yet only 38 per cent of GAI users surveyed by CLA expressed concern about inputting third-party content into prompts, revealing a significant awareness gap around copyright obligations.

Avoiding a ‘free-for-all’ mindset

One of the most significant areas of risk in workplace use of generative AI is the assumption that any material – whether organisational data or published content – can be freely entered into a GAI tool without consequence.

Robust governance is essential to give professionals confidence in using AI responsibly. This includes carefully managing sensitive commercial and proprietary information, understanding how GAI tools may use uploaded content, and setting clear rules on how content can be used and which tools are approved for use.

A 2024 study by Harmonic Security found that nearly one in 10 prompts contained organisational data such as customer records, employee details and legal or financial data. This carries significant risk to an organisation, as such material could be incorporated into a tool’s training data and be used to generate outputs, constituting a potential data breach.

Copyright compliance is equally important when leveraging published content with GAI tools. Organisations must ensure they hold the appropriate licences to support such use and comply with licence terms when using the published content to mitigate the risks of copyright infringement.

Implementing governance frameworks in the workplace

Many organisations are still in the early stages of establishing governance frameworks for generative AI use in the workplace. CLA’s research found that only 60 per cent of professionals believe their organisation has a GAI policy in place. Of those who believed no policy existed, just 38 per cent were aware of any plans to develop one.

Now is a timely opportunity to strengthen understanding of the responsible use of generative AI. Implementing clear governance frameworks and supportive policies early can help organisations manage risk, encourage best practice and build a culture of informed and compliant use.

Key considerations when developing a generative AI workplace framework may include:

- Generative AI usage policies: Establish clear, enforceable guidelines on how GAI tools can be used within the organisation, specifying approved use cases, tools and any restrictions.

- Content governance and licensing: Specify what data and content may be used as prompts or inputs and ensure appropriate permissions and licences are in place to support the lawful use of third-party published content protected by copyright.

- Employee training and accountability: Provide regular training to educate and inform on responsible usage, ethical implications and copyright obligations.

- Tool evaluation and security: Assess and continually review the security credentials of GAI tools before authorising their implementation to ensure they meet organisational data and privacy requirements and that inputted content is not used to train underlying models.

- Monitoring and auditing: Track usage of GAI tools across the organisation to ensure compliance with policies and to identify and address risks early.

Licensing as a practical solution to support governance

Licensing offers a straightforward solution to help organisations use generative AI responsibly. CLA has recently added permissions to its business and select public sector licences, allowing lawful copying and use of content within CLA’s repertoire to prompt GAI tools in accordance with the licence terms and conditions.

These permissions ensure that the rights of creators and rightsholders are protected and that fair remuneration is received for the use of their works.

Through licensing, organisations can strengthen their governance frameworks, enabling responsible and lawful use of published content to prompt GAI tools while benefiting from the potential of generative AI. This ensures that the benefits of generative AI are balanced with the rights of creators and rightsholders, fostering an ecosystem of ethical and sustainable innovation and growth, and helping the creative industries to continue to thrive and innovate.

To find out more about what CLA is doing in the generative AI and copyright space, visit cla.co.uk/ai-and-copyright

Related and recommended

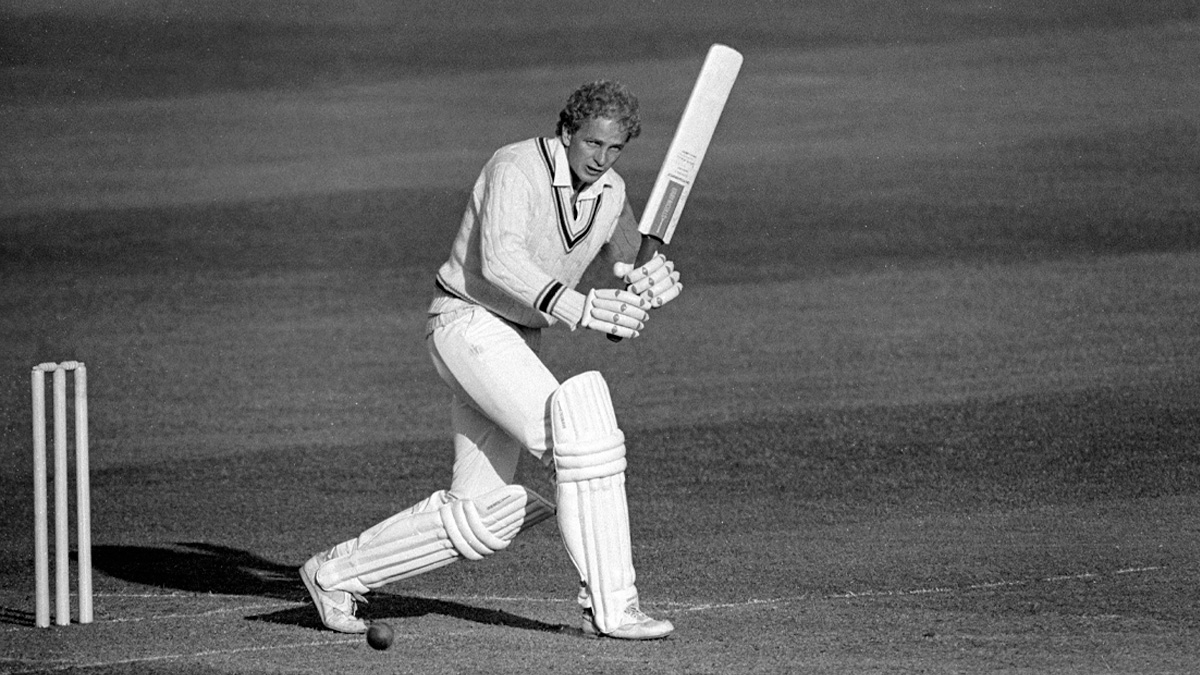

Spells of rare sporting brilliance show that finding intense concentration relies on achieving balance in your life

Rohan Blacker looks back at his time with e-commerce pioneer Sofa.com and explains the thinking behind his latest online furniture project

Rory Sutherland is one of the UK’s best-known marketing thinkers. He sets out why businesses should rethink how they value marketing, from direct mail to call centres and customer kindness

Leaders must realise the tech revolution can achieve its full potential only when human values remain central to change